Deep Visual Navigation under Partial Observability

How do humans navigate? We navigate almost exclusively by vision, guided by coarse floor plans. To reach a destination, we exhibit a diverse set of skills, such as obstacle avoidance, and we use tools, such as buses and elevators, to travel between locations. Robots cannot yet do all of this. To bring robots closer to human-level navigation performance, we propose a controller that learns complex navigation policies from data.

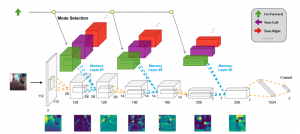

DECISION: Deep rEcurrent Controller for vISual navigatiON

B. Ai, W. Gao, Vinay, and D. Hsu. Deep Visual Navigation under Partial Observability. In International Conference on Robotics and Automation (ICRA), 2022.

The key lies in designing structural components that allow the controller to learn the desired capabilities. We identify three challenges:

(i) Partial observability: The robot may fail to see objects in its blind spots or to detect features of interest, e.g., the intention of a moving pedestrian.

(ii) Multimodal behaviors: Human navigation behaviors are inherently multimodal, and they depend on both the local environment and the high-level navigation objective.

(iii) Visual complexity: Raw pixels are high-dimensional, and the same kind of scene can look dramatically different across environments, which makes traditional model-based approaches brittle.

To address these challenges, we propose two key structural designs:

(i) Multi-scale temporal modeling: We use spatial memory modules to capture both low-level motion and high-level temporal semantics that are useful for control. This rich history information can compensate for partial observations.

(ii) Multimodal actions: We extend the idea of Mixture Density Networks (MDNs) to temporal reasoning. Specifically, we use an independent memory module for each mode to preserve the distinction between modes.
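The multi-scale temporal modeling in design (i) can be illustrated with a minimal NumPy sketch. It assumes, for illustration only, that a "spatial memory module" is a convolutional GRU that keeps a recurrent state at every pixel of a feature map, and that the two scales correspond to an early, high-resolution encoder layer and a late, low-resolution one. The class `ConvGRU1x1`, the 1x1 kernel, and all tensor sizes are hypothetical choices, not the DECISION implementation.

```python
import numpy as np

rng = np.random.default_rng(0)


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


class ConvGRU1x1:
    """Hypothetical spatial memory: a GRU applied at every pixel of a feature map.

    A 1x1 kernel keeps the sketch short; a practical module would likely use
    larger kernels so that neighbouring pixels can exchange information.
    """

    def __init__(self, in_ch, hid_ch):
        scale = 1.0 / np.sqrt(in_ch + hid_ch)
        # One weight matrix per gate, mixing the [input, hidden] channels.
        self.W_z = rng.normal(0.0, scale, (in_ch + hid_ch, hid_ch))
        self.W_r = rng.normal(0.0, scale, (in_ch + hid_ch, hid_ch))
        self.W_h = rng.normal(0.0, scale, (in_ch + hid_ch, hid_ch))
        self.hid_ch = hid_ch

    def init_state(self, height, width):
        # The memory keeps the spatial layout of the feature map it summarizes.
        return np.zeros((height, width, self.hid_ch))

    def step(self, x, h):
        # x: (H, W, in_ch), h: (H, W, hid_ch); `@` mixes only the channel axis.
        xh = np.concatenate([x, h], axis=-1)
        z = sigmoid(xh @ self.W_z)  # update gate
        r = sigmoid(xh @ self.W_r)  # reset gate
        h_tilde = np.tanh(np.concatenate([x, r * h], axis=-1) @ self.W_h)
        return (1.0 - z) * h + z * h_tilde


# Two hypothetical encoder levels: a fine map for low-level motion cues and a
# coarse map for high-level semantic cues, each with its own memory.
low_mem = ConvGRU1x1(in_ch=8, hid_ch=8)
high_mem = ConvGRU1x1(in_ch=32, hid_ch=16)
h_low = low_mem.init_state(16, 16)
h_high = high_mem.init_state(4, 4)

for _ in range(5):
    low_feat = rng.normal(size=(16, 16, 8))   # stand-in for early-layer features
    high_feat = rng.normal(size=(4, 4, 32))   # stand-in for late-layer features
    h_low = low_mem.step(low_feat, h_low)
    h_high = high_mem.step(high_feat, h_high)

print(h_low.shape, h_high.shape)  # (16, 16, 8) (4, 4, 16)
```

Giving each level its own memory is meant to let the fine memory track pixel-level motion while the coarse one accumulates scene-level context; how DECISION actually distributes its memories across the encoder is an assumption of this sketch.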
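Design (ii) can likewise be sketched under stated assumptions: each of K modes owns a separate GRU memory and a mean-action head, a gating layer turns the concatenated memories into mixture weights, and training minimizes the negative log-likelihood of the demonstrated action under the resulting Gaussian mixture, as in a standard MDN. The names `MultimodalHead` and `mixture_nll`, the fixed standard deviations, the two-dimensional action, and the way the mixture weights are computed are all hypothetical; the actual DECISION architecture may differ.

```python
import numpy as np

rng = np.random.default_rng(0)


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def logsumexp(a):
    # Numerically stable log(sum(exp(a))) for a 1-D array.
    m = np.max(a)
    return m + np.log(np.sum(np.exp(a - m)))


class GRUCell:
    """Minimal vector GRU; one instance per mode keeps mode memories separate."""

    def __init__(self, in_dim, hid_dim):
        s = 1.0 / np.sqrt(in_dim + hid_dim)
        self.W_z, self.W_r, self.W_h = (
            rng.normal(0.0, s, (in_dim + hid_dim, hid_dim)) for _ in range(3)
        )

    def step(self, x, h):
        xh = np.concatenate([x, h])
        z = sigmoid(xh @ self.W_z)  # update gate
        r = sigmoid(xh @ self.W_r)  # reset gate
        h_tilde = np.tanh(np.concatenate([x, r * h]) @ self.W_h)
        return (1.0 - z) * h + z * h_tilde


class MultimodalHead:
    """Hypothetical MDN head with one independent recurrent memory per mode."""

    def __init__(self, feat_dim, hid_dim, act_dim, n_modes):
        self.hid_dim = hid_dim
        self.memories = [GRUCell(feat_dim, hid_dim) for _ in range(n_modes)]
        self.mean_heads = [rng.normal(0.0, 0.1, (hid_dim, act_dim)) for _ in range(n_modes)]
        self.logit_head = rng.normal(0.0, 0.1, (n_modes * hid_dim, n_modes))
        self.log_std = np.zeros((n_modes, act_dim))  # fixed here for simplicity

    def init_state(self):
        return [np.zeros(self.hid_dim) for _ in self.memories]

    def step(self, feat, states):
        # Each mode updates only its own memory from the shared features.
        states = [mem.step(feat, h) for mem, h in zip(self.memories, states)]
        # Each mode's mean action depends only on that mode's memory.
        means = np.stack([h @ W for h, W in zip(states, self.mean_heads)])  # (K, act_dim)
        # The mixture weights look at all memories jointly.
        logits = np.concatenate(states) @ self.logit_head  # (K,)
        log_weights = logits - logsumexp(logits)
        return log_weights, means, states


def mixture_nll(action, log_weights, means, log_std):
    """Negative log-likelihood of `action` under a diagonal-Gaussian mixture."""
    var = np.exp(2.0 * log_std)
    log_comp = -0.5 * np.sum(
        (action - means) ** 2 / var + 2.0 * log_std + np.log(2.0 * np.pi), axis=1
    )
    return -logsumexp(log_weights + log_comp)


head = MultimodalHead(feat_dim=16, hid_dim=8, act_dim=2, n_modes=3)
states = head.init_state()
for _ in range(4):
    feat = rng.normal(size=16)  # stand-in for the shared visual encoder output
    log_w, means, states = head.step(feat, states)

demo_action = np.array([0.5, 0.0])  # hypothetical (linear, angular) velocity label
loss = mixture_nll(demo_action, log_w, means, head.log_std)
print(loss)
```

Because each mean is computed from its own memory only, the per-mode histories stay separate, while the gating layer sees all of them when scoring which mode is likely. At run time one could act on the mean of the most probable mode or sample from the mixture; either rule is an assumption of this sketch.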

We collected a real-world human demonstration dataset of 410K timesteps and trained the controller end-to-end on it. Our DECISION controller significantly outperforms CNN and LSTM baselines.

The controller was first deployed on our Boston Dynamics Spot robot in April 2022. At the time of writing, it has navigated autonomously for more than 150 km, and it has been incrementally improved over time as an integral component of the GoAnywhere@NUS project.

A distinctive feature of this work is that all experimental results were obtained in the real world. Our demo video shows our Spot robot traversing many different locations on our university campus.