We aim to develop robust reinforcement learning (RL) for tasks with complex partial observations. While existing RL algorithms have achieved great success in simulated environments, such as Atari games, Go, and even Dota, generalizing them to realistic environments with complex partial observations remains challenging. The key to our approach is to learn a robust and compact latent state representation from the partial observations. Specifically, we handle partial observability by combining the particle filter algorithm with recurrent neural networks. We tackle complex observations through efficient discriminative model learning, which focuses on learning the parts of the observations required for action selection rather than the entire high-dimensional observation space.

Particle Filter Recurrent Neural Networks

X. Ma, P. Karkus, D. Hsu, and W.S. Lee. Particle filter recurrent neural networks. In Proc. AAAI Conf. on Artificial Intelligence, 2020. [PDF][Code]

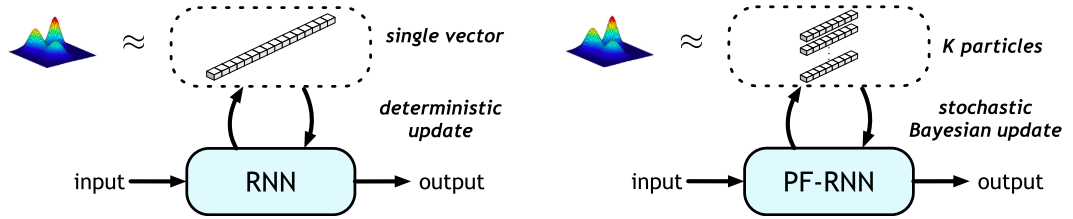

Recurrent neural networks (RNNs) have been extraordinarily successful for prediction with sequential data. To tackle highly variable and noisy real-world data, we introduce Particle Filter Recurrent Neural Networks (PF-RNNs), a new RNN family that explicitly models uncertainty in its internal structure: while an RNN relies on a long, deterministic latent state vector, a PF-RNN maintains a latent state distribution, approximated as a set of particles. For effective learning, we provide a fully differentiable particle filter algorithm that updates the PF-RNN latent state distribution according to Bayes' rule. Experiments demonstrate that the proposed PF-RNNs outperform the corresponding standard gated RNNs on a synthetic robot localization dataset and 10 real-world sequence prediction datasets covering tasks such as text classification and stock price prediction.
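To make the idea of a particle-based latent state concrete, the following is a minimal NumPy sketch of one update step, not the authors' implementation. It assumes a plain tanh cell in place of a gated RNN, a linear scoring function standing in for the learned observation model, and a soft-resampling step with a mixing coefficient `alpha`. All names (`pf_rnn_step`, `params`, `w_obs`, `alpha`) are hypothetical, and the sketch runs the forward pass only, with no gradients.

```python
import numpy as np

def logsumexp(a):
    """Numerically stable log(sum(exp(a)))."""
    m = np.max(a)
    return m + np.log(np.sum(np.exp(a - m)))

def pf_rnn_step(h, log_w, x, params, rng, alpha=0.5):
    """One particle-filter-style update of K latent particles.

    h: (K, D) particle states, log_w: (K,) normalized log-weights,
    x: (M,) current input. Returns updated (h, log_w).
    """
    K = h.shape[0]
    # Transition: every particle passes through a shared stochastic cell.
    noise = 0.1 * rng.standard_normal(h.shape)
    h_new = np.tanh(h @ params["W_h"] + x @ params["W_x"] + params["b"] + noise)
    # Measurement: a scalar score per particle plays the role of a
    # log-likelihood, so adding it to the log-weight is a Bayes update.
    feats = np.concatenate([h_new, np.tile(x, (K, 1))], axis=1)
    log_w = log_w + feats @ params["w_obs"]
    log_w = log_w - logsumexp(log_w)
    # Soft resampling (assumed here): sample from a mixture of the weights
    # and a uniform distribution, then correct with importance weights.
    w = np.exp(log_w)
    q = alpha * w + (1.0 - alpha) / K
    idx = rng.choice(K, size=K, p=q)
    log_w_res = np.log(w[idx]) - np.log(q[idx])
    return h_new[idx], log_w_res - logsumexp(log_w_res)

# Toy usage with random (untrained) parameters.
rng = np.random.default_rng(0)
K, D, M = 8, 4, 3
params = {
    "W_h": 0.5 * rng.standard_normal((D, D)),
    "W_x": 0.5 * rng.standard_normal((M, D)),
    "b": np.zeros(D),
    "w_obs": 0.5 * rng.standard_normal(D + M),
}
h = np.zeros((K, D))
log_w = np.full(K, -np.log(K))  # uniform initial belief
for x in rng.standard_normal((5, M)):
    h, log_w = pf_rnn_step(h, log_w, x, params, rng)
```

A prediction would then be read out from a summary of the particle set, for example the weighted mean `np.exp(log_w) @ h`.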

Discriminative Particle Filter Reinforcement Learning

X. Ma, P. Karkus, D. Hsu, W.S. Lee, and N. Ye. Discriminative particle filter reinforcement learning for complex partial observations. In Proc. Int. Conf. on Learning Representations, 2020. [PDF][Project][Code]

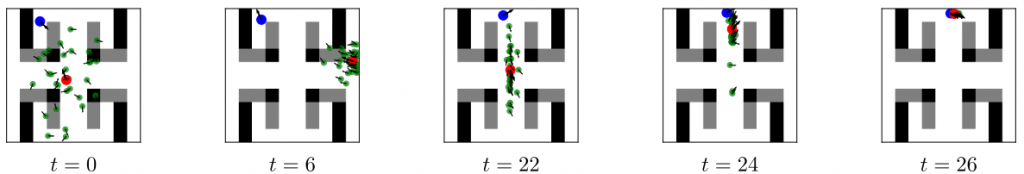

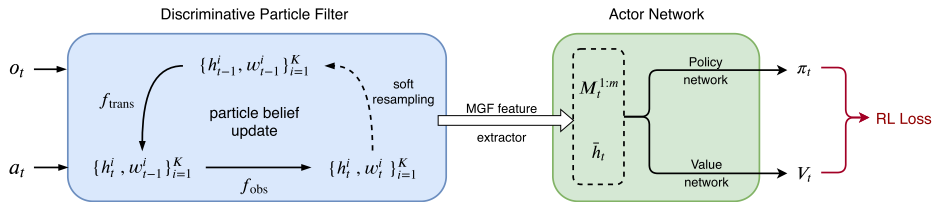

Real-world decision making often requires reasoning in a partially observable environment using information obtained from complex visual observations, both of which are major challenges for deep reinforcement learning. In this paper, we introduce Discriminative Particle Filter Reinforcement Learning (DPFRL), a reinforcement learning method that encodes a particle filter structure with learned discriminative transition and observation models in a neural network. The particle filter structure allows for reasoning with partial observations, and the discriminative parameterization allows modeling only the information in the complex observations that is relevant for decision making. In experiments, we show that in most cases DPFRL outperforms state-of-the-art POMDP RL models in Flickering Atari Games, an existing POMDP RL benchmark, as well as in Natural Flickering Atari Games, a new, more challenging POMDP RL benchmark that we introduce. We also show that DPFRL performs well when applied to a visual navigation domain with real-world data.
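The difference between a discriminative and a generative parameterization can be illustrated with a short sketch. The code below is an illustration under stated assumptions, not DPFRL itself: the transition is conditioned directly on observation features and the previous action, the observation model is a bilinear compatibility score rather than a density that reconstructs the observation, and the policy reads only the weighted mean of the particles. The function and parameter names (`discriminative_update`, `policy`, `W_c`, `W_s`, `W_pi`) are hypothetical.

```python
import numpy as np

def softmax(z):
    """Numerically stable softmax over a 1-D array."""
    e = np.exp(z - np.max(z))
    return e / e.sum()

def discriminative_update(h, w, obs_feat, action_onehot, p):
    """Update K particles without ever reconstructing the observation.

    h: (K, D) particles, w: (K,) normalized weights,
    obs_feat: (F,) encoded observation, action_onehot: (A,) last action.
    """
    ctx = np.concatenate([obs_feat, action_onehot])
    # Transition conditioned on the observation features and last action.
    h_new = np.tanh(h @ p["W_h"] + ctx @ p["W_c"])
    # Observation model: one scalar compatibility score per particle.
    scores = h_new @ p["W_s"] @ obs_feat
    w_new = softmax(np.log(w) + scores)
    return h_new, w_new

def policy(h, w, p):
    """Action probabilities from the weighted mean of the particles."""
    belief_mean = w @ h
    return softmax(belief_mean @ p["W_pi"])

# Toy usage with random (untrained) parameters.
rng = np.random.default_rng(1)
K, D, F, A = 6, 4, 5, 3
p = {
    "W_h": 0.5 * rng.standard_normal((D, D)),
    "W_c": 0.5 * rng.standard_normal((F + A, D)),
    "W_s": 0.5 * rng.standard_normal((D, F)),
    "W_pi": 0.5 * rng.standard_normal((D, A)),
}
h = np.zeros((K, D)) + 0.1 * rng.standard_normal((K, D))
w = np.full(K, 1.0 / K)
h, w = discriminative_update(h, w, rng.standard_normal(F), np.eye(A)[0], p)
action_probs = policy(h, w, p)
```

Because the score is a single number per particle, the model never has to account for pixels that do not affect which latent states are plausible.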

Contrastive Variational Reinforcement Learning

X. Ma, S. Chen, D. Hsu and W.S. Lee. Contrastive variational reinforcement learning for complex observations. In Proc. 4th Conf. on Robot Learning, 2020. [PDF][Project][Code]

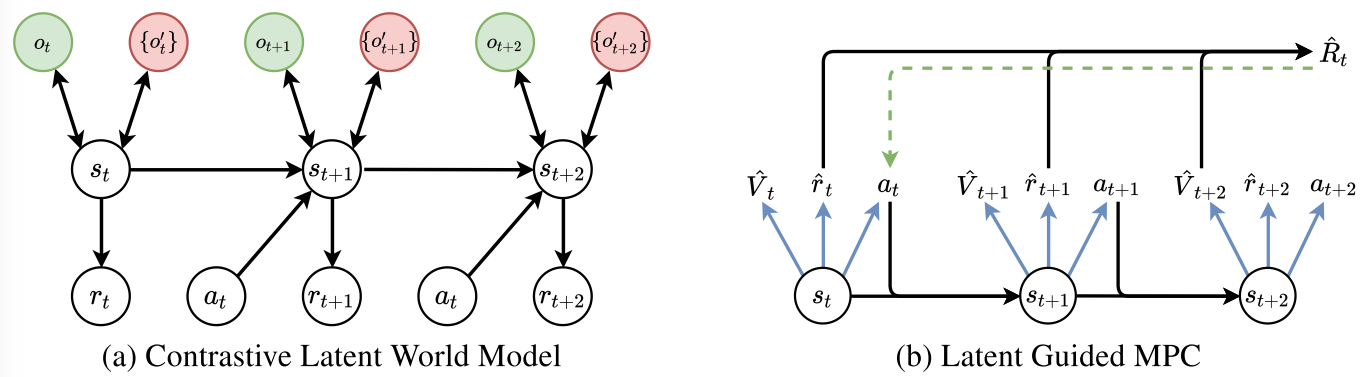

Deep reinforcement learning (DRL) has achieved significant success in various robot tasks, such as manipulation and navigation. However, complex visual observations in natural environments remain a major challenge. This paper presents Contrastive Variational Reinforcement Learning (CVRL), a model-based method that tackles complex visual observations in DRL. CVRL learns a contrastive variational model by maximizing the mutual information between latent states and observations discriminatively, through contrastive learning. It avoids modeling the complex observation space unnecessarily, as the commonly used generative observation model often does, and is significantly more robust. CVRL achieves performance comparable to state-of-the-art model-based DRL methods on standard Mujoco tasks. It significantly outperforms them on Natural Mujoco tasks and a robot box-pushing task with complex observations, e.g., dynamic shadows.
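One common way to maximize mutual information through contrastive learning is the InfoNCE loss, which treats the matching (latent state, observation) pair in a batch as the positive and all other pairings as negatives. The sketch below shows that generic loss in NumPy. It is an assumption that this form is close to what CVRL optimizes; the full CVRL objective is not reproduced here, and the names `info_nce_loss`, `z`, `e`, and `temperature` are illustrative.

```python
import numpy as np

def info_nce_loss(z, e, temperature=1.0):
    """InfoNCE loss for a batch of paired embeddings.

    z: (B, D) latent states, e: (B, D) observation embeddings,
    where row i of z is paired with row i of e.
    """
    logits = (z @ e.T) / temperature                      # (B, B) pair scores
    logits = logits - logits.max(axis=1, keepdims=True)   # numerical stability
    log_prob = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    # Cross-entropy with the diagonal (true pairs) as the correct class.
    return -np.mean(np.diag(log_prob))

# Toy usage: aligned pairs should score a lower loss than shuffled pairs.
e = np.eye(4)
z = 5.0 * np.eye(4)
loss_aligned = info_nce_loss(z, e)
loss_shuffled = info_nce_loss(z, np.roll(e, 1, axis=0))
```

Minimizing this loss tightens a lower bound on the mutual information between `z` and `e`, and it only requires scoring observations against latent states, not generating them.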